June 13, 2023

Amid the wave of ChatGPT euphoria flooding the market, feelings about the technology in the workplace vary considerably with employee seniority.

While those at the top are far more positive about the promise of artificial intelligence (AI), frontline employees find it significantly more worrisome.

According to new research by Boston Consulting Group (BCG), AI at Work: What People Are Saying, boardroom enthusiasm is not being matched on the company floor, highlighting a sizeable disconnect between leadership and employees.

That disconnect should be taken seriously, given that the survey covers more than 12,800 employees, from the executive suite to the front lines, spanning 18 countries and multiple industries.

Specifically, 62% of leaders are optimistic about AI, while only 42% of frontline employees share that view. Against that backdrop, 36% of employees think their job is likely to be eliminated as a result of the technology now capturing the corporate imagination.

To prepare for the new era of AI at work, 86% of employees now recognise the need for training to sharpen their skills. However, only 14% of frontline employees have received up-skilling training to date, compared with 44% of C-suite executives.

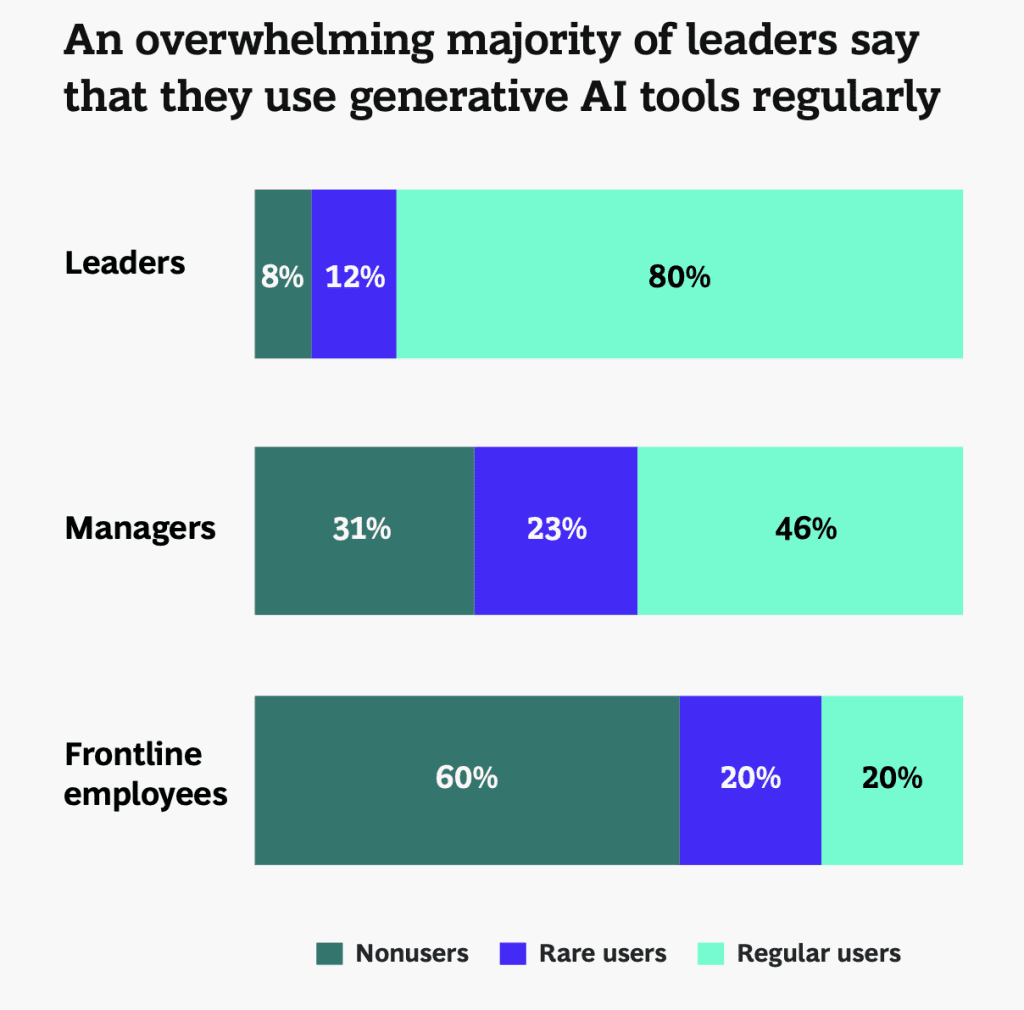

The gap is even wider when it comes to usage. Some 80% of leaders are classed as “regular users” of generative AI tools, meaning they use the technology at least weekly. By contrast, only 20% of frontline workers fall into that category, and a notable 60% are classed as “non-users”.

“The companies that capture the most value from AI follow the 10-20-70 rule,” explained Vinciane Beauchene, Managing Director and Partner at BCG.

“10% of their AI effort goes to designing algorithms, 20% to building the underlying technologies, and 70% to supporting people and adapting business processes.”

Given the data, it’s no surprise that senior leaders, who use generative AI more frequently and have greater access to training, are more optimistic and less concerned than frontline employees.

“Up-skilling is essential and must be done continuously,” Beauchene added. “It should go beyond learning how to use the technology and allow employees to adapt in their role as activities and skill requirements evolve.”

Regulation and responsibility top of mind

Despite staff concerns, 71% of employees believe that the rewards of generative AI outweigh the risks.

But acceptance comes with acknowledgement that such risks must be managed – 79% of respondents believe that AI-specific regulations are “necessary”, representing a marked shift in attitudes toward government oversight of technology.

“Rather than waiting for government regulation to be enacted, many companies are developing and deploying their own responsible AI frameworks to manage this powerful emerging technology in a way that aligns with organisational purpose and ethical values,” the BCG report stated.

But employee views on the effectiveness of these programs vary widely.

While 68% of leaders feel confident about their organisation’s responsible use of AI, just 29% of frontline employees believe their companies have implemented adequate measures to ensure AI is used responsibly.

“The level of concern among employees about the responsible use of AI is striking,” added Steven Mills, Chief AI Ethics Officer at BCG.

“Generative AI burst on the scene so abruptly in 2022 that many companies are still playing catch-up. However, responsible AI should be a priority for all leaders.”

Although 52% of all employees ranked optimism as one of their top two sentiments (a 17-point jump from 2018, when the survey was last conducted), support for organisations’ use of generative AI remains conditional.

“Companies won’t achieve the full potential of generative AI if the majority of their employees continue to doubt their employer is using AI responsibly,” Mills warned.

“Responsible AI doesn’t just mitigate risk, it can also increase innovation and productivity, and generate value and competitive advantage for organisations.”

In short, Mills said employees are ready to accept AI in the workplace but only if they are comfortable that their employer is committed to “doing the right thing”.

The report outlined three key recommendations for leaders:
